In the competitive remote job market for data professionals, showing your foundational skills is essential. Strong data modeling is not just a technical skill. It is a strategic asset that proves you can build scalable, efficient, and reliable systems that drive business decisions. Hiring managers want experts who understand the "why" behind data structure, not just someone who can write a query. Getting this right on your resume and in interviews can be the difference between getting an offer and being overlooked.

This guide breaks down the essential data modeling best practices that top companies look for. We will provide practical examples you can add to your projects, discuss in interviews, and use to frame your resume accomplishments. Mastering these concepts is crucial, but so is finding the right opportunities. For those looking to use their data modeling skills, understanding effective strategies for landing remote software engineer jobs and related data roles is key.

Our goal is to give you a clear roadmap to demonstrate the architectural thinking that secures top tier remote and hybrid roles, helping you get hired faster. You will learn not only what to do but how to articulate its business value, a critical skill for any data professional. Let's start building a stronger foundation for your career.

1. Normalize Your Data Structure

Data normalization is a core practice that organizes a database to reduce data redundancy and improve data integrity. The main idea is simple: store each piece of data in only one place. By structuring data into logical tables with clear relationships, you prevent common errors that can corrupt your dataset over time.

For data professionals building scalable systems, normalization is non negotiable. Imagine a job board database. Without normalization, a company's name and address might be repeated for every job posting. If the company moves, you would have to update its address in hundreds of rows, risking errors. Normalization solves this by storing company information in a separate Companies table and linking it to a Job_Postings table via a company_id.

Key Takeaway: Normalization is not just about saving space. It is a critical strategy for maintaining data accuracy and making your database easier to manage as business needs change. On your resume, this translates to building "reliable" and "maintainable" data systems.

How to Implement Normalization on Your Projects

Your goal is to organize data to meet specific "normal forms," with Third Normal Form (3NF) being the standard for most transactional databases.

- Start with 3NF: This form ensures that every column outside the primary key depends only on that key and not on another column, which eliminates most common data issues. Building a SQL project for your resume is a great way to practice normalization.

- Use ERDs: Use Entity Relationship Diagrams (ERDs) to map out your tables, primary keys, foreign keys, and their relationships. This visual blueprint makes complex structures easy to understand.

- Balance with Performance: Highly normalized schemas can sometimes lead to complex queries with many joins, which can slow down performance. For analytics or reporting, you may selectively denormalize certain tables to optimize query speed.
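
The job board example above can be sketched in a few lines. This is a minimal illustration, assuming Python's built in sqlite3 module as a stand in for your real database; the table names, column names, and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE companies (
        company_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL,
        address    TEXT NOT NULL
    );
    CREATE TABLE job_postings (
        job_posting_id INTEGER PRIMARY KEY,
        company_id     INTEGER NOT NULL REFERENCES companies(company_id),
        title          TEXT NOT NULL
    );
    INSERT INTO companies VALUES (1, 'Acme Analytics', '1 Old Street');
    INSERT INTO job_postings VALUES
        (1, 1, 'Data Analyst'),
        (2, 1, 'Analytics Engineer');
""")

# The company moves: one UPDATE on one row, however many postings exist.
conn.execute(
    "UPDATE companies SET address = ? WHERE company_id = ?",
    ("9 New Avenue", 1),
)

rows = conn.execute("""
    SELECT jp.title, c.address
    FROM job_postings AS jp
    JOIN companies AS c ON c.company_id = jp.company_id
    ORDER BY jp.job_posting_id
""").fetchall()
print(rows)
```

Because the address lives only in companies, every posting picks up the new value through the join, with no risk of a stale copy.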

2. Use a Dimensional Modeling Approach (Star Schema)

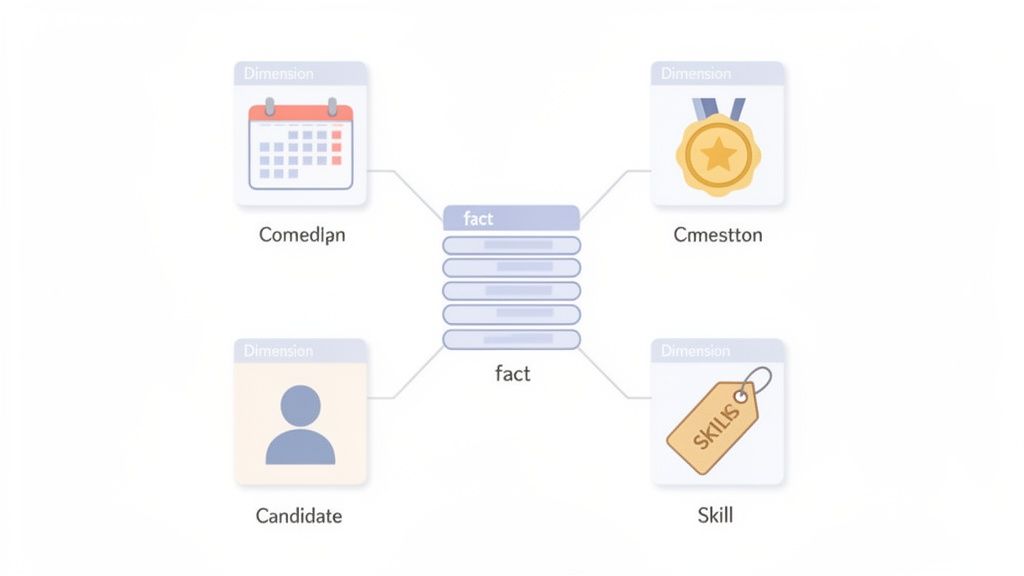

While normalization is key for transactional systems, dimensional modeling is the standard for analytics and business intelligence. This approach organizes data into fact tables (containing business events or metrics) and dimension tables (containing descriptive context). This structure, often called a star schema, is optimized for fast and intuitive querying, making it a critical skill for any analytics role.

For data professionals who need to find insights in large datasets, dimensional modeling is essential. Consider an analytics database at a company like Jobsolv. A fact table might store job application events. This fact table would be surrounded by dimension tables for Candidates, Companies, and Locations, allowing analysts to easily answer questions like, "How many data scientist roles were applied for in Austin last quarter?"

Key Takeaway: Dimensional modeling prioritizes query performance and makes data easy for business users to understand. This makes it the ideal choice for data warehouses and analytics platforms where reading data is more common than writing it.

How to Implement a Star Schema

Your goal is to build a model that reflects business processes and makes it simple for analysts to explore data.

- Identify Business Processes: Start by defining the core business events you need to measure, such as job applications or user clicks. These events become your fact tables.

- Define Descriptive Dimensions: For each fact table, identify the "who, what, where, when, and why." These become your dimension tables. For a job application fact, dimensions would include Candidate, Company, Job Posting, and Date.

- Use Surrogate Keys: Use simple integer based keys as the primary keys in your dimension tables. These keys are then used as foreign keys in the fact table to improve join performance.

- Plan for Historical Tracking: Use Slowly Changing Dimensions (SCDs) to manage how your model handles changes over time, ensuring historical reporting remains accurate. For example, if a candidate moves to a new city, an SCD strategy preserves their old location for past analyses.
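
To make the star schema concrete, here is a small sketch that answers the Austin question from earlier. It assumes sqlite3 as a stand in for a warehouse, and the dim_ and fact_ table names, the integer date keys, and the sample rows are all illustrative choices rather than a prescribed layout.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_location (location_key INTEGER PRIMARY KEY, city TEXT NOT NULL);
    CREATE TABLE dim_job (job_key INTEGER PRIMARY KEY, job_title TEXT NOT NULL);
    CREATE TABLE dim_date (
        date_key INTEGER PRIMARY KEY,
        year     INTEGER NOT NULL,
        quarter  INTEGER NOT NULL
    );

    -- One row per job application event; each column references a dimension key.
    CREATE TABLE fact_application (
        application_key INTEGER PRIMARY KEY,
        job_key      INTEGER NOT NULL REFERENCES dim_job(job_key),
        location_key INTEGER NOT NULL REFERENCES dim_location(location_key),
        date_key     INTEGER NOT NULL REFERENCES dim_date(date_key)
    );

    INSERT INTO dim_location VALUES (1, 'Austin'), (2, 'Denver');
    INSERT INTO dim_job VALUES (1, 'Data Scientist'), (2, 'Data Engineer');
    INSERT INTO dim_date VALUES (20240115, 2024, 1), (20240420, 2024, 2);
    INSERT INTO fact_application VALUES
        (1, 1, 1, 20240115),
        (2, 1, 1, 20240115),
        (3, 2, 1, 20240115),
        (4, 1, 2, 20240115),
        (5, 1, 1, 20240420);
""")

# "How many data scientist roles were applied for in Austin in Q1 2024?"
austin_ds_q1 = conn.execute("""
    SELECT COUNT(*)
    FROM fact_application AS f
    JOIN dim_job      AS j ON j.job_key = f.job_key
    JOIN dim_location AS l ON l.location_key = f.location_key
    JOIN dim_date     AS d ON d.date_key = f.date_key
    WHERE j.job_title = 'Data Scientist'
      AND l.city = 'Austin'
      AND d.year = 2024 AND d.quarter = 1
""").fetchone()[0]
print(austin_ds_q1)
```

Every analytical question follows the same shape: start at the fact table, join out to the dimensions you need, and filter on descriptive columns.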

3. Define Clear Data Relationships

Defining clear relationships between data tables is a cornerstone of effective data modeling. This practice involves mapping how tables connect to one another, including their cardinality (one to one, one to many, many to many). By enforcing these rules at the database level, you ensure data integrity, prevent orphaned records, and create a logical structure that reflects real business rules.

For data professionals, this is a non negotiable step. Consider a resume parsing system. Without clear relationships, a Skills table might not properly link to a Resumes table. This could lead to a situation where deleting a resume leaves behind useless skill tags. Clear relationships, established with foreign keys, prevent these issues.

Key Takeaway: Data relationships provide structure and ensure that related information behaves correctly. This is critical for maintaining accuracy and consistency, a key skill recruiters look for.

How to Implement Clear Relationships

Your goal is to build a schema where the connections between tables are enforced and easy to understand.

- Visualize with ERDs: Use an Entity Relationship Diagram (ERD) as your blueprint. Visually map out each table and draw lines to represent the relationships. Clearly label the cardinality (e.g., 1 to ∞) on these lines.

- Enforce with Foreign Keys: A foreign key in one table that points to a primary key in another is the main way to enforce a relationship. Consistently using these constraints is a fundamental data modeling best practice.

- Define Cascade Behaviors: Decide what happens when a referenced record is updated or deleted. Clearly define actions like CASCADE (delete dependent rows) or SET NULL (set foreign keys to null). This prevents orphaned records and maintains relational integrity.
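
The resume parsing scenario can be sketched as follows. This assumes sqlite3, where foreign key enforcement has to be switched on per connection; the resumes and resume_skills tables are hypothetical names for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled on the connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE resumes (
        resume_id      INTEGER PRIMARY KEY,
        candidate_name TEXT NOT NULL
    );
    CREATE TABLE resume_skills (
        resume_skill_id INTEGER PRIMARY KEY,
        resume_id INTEGER NOT NULL
            REFERENCES resumes(resume_id) ON DELETE CASCADE,
        skill TEXT NOT NULL
    );
    INSERT INTO resumes VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO resume_skills (resume_id, skill) VALUES
        (1, 'SQL'), (1, 'Python'), (2, 'dbt');
""")

# Deleting a resume removes its skill rows automatically.
conn.execute("DELETE FROM resumes WHERE resume_id = 1")
remaining_skills = conn.execute(
    "SELECT resume_id, skill FROM resume_skills"
).fetchall()

# A skill pointing at a resume that does not exist is rejected outright.
try:
    conn.execute("INSERT INTO resume_skills (resume_id, skill) VALUES (99, 'Spark')")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(remaining_skills, orphan_rejected)
```

Swapping ON DELETE CASCADE for ON DELETE SET NULL would keep the skill rows and blank their resume_id instead, which requires that column to be nullable.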

4. Use Descriptive Naming Conventions

Consistent and clear naming conventions are a simple but powerful data modeling practice. This involves establishing a standard way to name tables, columns, and other database objects. When names are intuitive and predictable, you reduce confusion and make collaboration across teams much smoother.

For data teams, ambiguous names can lead to critical errors. A column named exp could mean "experience in years" or "expiration date." A descriptive name like years_of_experience removes all doubt. This clarity is essential for building scalable and maintainable data models that your future teammates can easily understand.

Key Takeaway: Good naming conventions are an investment in long term maintainability. They reduce development time and make your data assets easier for both people and machines to understand.

How to Implement Naming Conventions

Your goal is to create a documented standard that is logical, consistent, and aligned with business terms.

- Establish a Style Guide: Before you design your schema, create a simple guide outlining your naming rules. A common and readable standard is lowercase_with_underscores (snake case) for tables and columns. For example, use job_postings instead of JobPostings.

- Be Descriptive, Not Cryptic: Always choose clarity over brevity. Instead of usr_id, use user_id. Instead of app_dt, use application_date. This makes your SQL queries self documenting and easier to debug.

- Use Prefixes for Clarity: Group related fields logically with common prefixes. For instance, columns could be named application_id, application_status, and application_source. This instantly tells you which entity the column describes.

5. Use Data Validation and Quality Constraints

Data validation and quality constraints are your first line of defense for data integrity. By defining rules directly within the database schema, you ensure that only clean, valid, and complete data enters your system. This approach prevents data quality issues at the source, rather than trying to fix them later with costly cleanup processes.

For a platform like Jobsolv, this is essential. Imagine a Candidates table where an email address is optional or a Job_Postings table where the salary could be a negative number. This invalid data would break application tracking and erode user trust. By using constraints like NOT NULL, UNIQUE, and CHECK, you enforce business rules at the lowest level.

Key Takeaway: Data constraints are not just database rules. They are the encoded business logic that protects your application from "Garbage In, Garbage Out," ensuring operational reliability and analytical accuracy.

How to Implement Data Validation

Your goal is to build a self defending data model where the database itself rejects invalid data.

- Define Critical Fields with NOT NULL: Identify columns that are essential for a record to be meaningful. For instance, a job application record is useless without a candidate_id and job_posting_id.

- Enforce Business Logic with CHECK Constraints: Use CHECK constraints to validate data against specific business rules. For example, in a Job_Postings table, you could use a constraint like CHECK (salary_max >= salary_min).

- Guarantee Uniqueness: Apply UNIQUE constraints to columns that must not contain duplicate values, such as email in a Users table. This prevents duplicate records. You can build a data pipeline to manage and validate this information effectively.
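
Here is a rough sketch of a self defending schema using all three constraint types. It assumes sqlite3 and a hypothetical try_insert helper that reports whether the database accepted a row; the sample values are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (
        user_id INTEGER PRIMARY KEY,
        email   TEXT NOT NULL UNIQUE
    );
    CREATE TABLE job_postings (
        job_posting_id INTEGER PRIMARY KEY,
        title      TEXT NOT NULL,
        salary_min INTEGER NOT NULL CHECK (salary_min >= 0),
        salary_max INTEGER NOT NULL,
        CHECK (salary_max >= salary_min)
    );
""")

def try_insert(sql, params):
    """Return True if the row is accepted, False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False

user_sql = "INSERT INTO users (email) VALUES (?)"
job_sql = "INSERT INTO job_postings (title, salary_min, salary_max) VALUES (?, ?, ?)"

results = {
    "valid_user": try_insert(user_sql, ("ada@example.com",)),
    "duplicate_email": try_insert(user_sql, ("ada@example.com",)),
    "missing_email": try_insert(user_sql, (None,)),
    "valid_job": try_insert(job_sql, ("Data Analyst", 70000, 90000)),
    "inverted_range": try_insert(job_sql, ("Data Analyst", 90000, 70000)),
    "negative_salary": try_insert(job_sql, ("Data Analyst", -1, 50000)),
}
print(results)
```

Only the two valid rows get through. The application code never has to remember the rules, because the database refuses anything that breaks them.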

6. Design for Scalability and Performance

A data model that works for a thousand records can fail at a million. Designing for scalability means building your database schema with future growth in mind. This involves anticipating how data will be accessed and using structures like indexes and partitions to ensure query performance remains fast as data volume grows.

For a platform like Jobsolv, which manages over 100,000 job postings, a scalable design is a necessity. Without it, features like job filtering would become progressively slower, creating a poor user experience. Proactive performance design prevents the need for costly database refactoring down the line.

Key Takeaway: Scalability is about making intelligent design choices that accommodate growth without requiring a complete architectural overhaul. This skill shows hiring managers you think long term.

How to Implement Scalable Design

Building a high performance data model involves a strategic combination of indexing, partitioning, and monitoring.

- Analyze Access Patterns: Before creating indexes, understand how your application will query the data. For Jobsolv, common queries might filter jobs by job_category, location, and experience_level. A composite index on these columns would dramatically speed up searches.

- Implement Strategic Partitioning: Break down massive tables into smaller pieces. A common strategy is to partition time series data, like an applications table, by month or year. This allows the database to scan only the relevant partition instead of the entire table.

- Use Query Execution Plans: Use database tools like EXPLAIN to analyze how your queries are running. These plans reveal performance bottlenecks, such as full table scans, and show if your indexes are being used effectively.

- Consider Read Replicas: For systems with heavy read traffic, like analytics dashboards, use read replicas. This separates analytical workloads from your main transactional database, ensuring reports do not slow down the primary application.
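
The indexing and execution plan steps can be tried out together. This sketch assumes sqlite3, whose variant of the command is EXPLAIN QUERY PLAN; the index name and the plan helper are hypothetical, and the exact plan wording differs between database engines and versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE job_postings (
        job_posting_id   INTEGER PRIMARY KEY,
        title            TEXT NOT NULL,
        job_category     TEXT NOT NULL,
        location         TEXT NOT NULL,
        experience_level TEXT NOT NULL
    );
""")

query = """
    SELECT title FROM job_postings
    WHERE job_category = ? AND location = ? AND experience_level = ?
"""
params = ("analytics", "Austin", "senior")

def plan(sql, args):
    """Return the human readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, args).fetchall()
    return " | ".join(row[-1] for row in rows)

# Without an index, the only option is to scan the whole table.
plan_before = plan(query, params)

# Composite index covering the three filter columns from the access pattern.
conn.execute("""
    CREATE INDEX idx_job_postings_filter
    ON job_postings (job_category, location, experience_level)
""")
plan_after = plan(query, params)

print(plan_before)
print(plan_after)
```

Comparing the two plans before and after a schema change is a quick habit to build: if the index name does not show up in the second plan, the index is not helping that query.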

7. Maintain a Single Source of Truth

Establishing a Single Source of Truth (SSOT) is a critical practice that ensures all users and systems rely on one authoritative data source. This approach prevents the chaos that arises when multiple, contradictory versions of the same information exist. By defining a "golden record" for core business entities, you create a foundation of trust and reliability.

For Jobsolv, this principle is essential. Imagine a candidate updates their profile with a new certification, but an older resume file still lists their old skills. This conflict could harm their job application chances. An SSOT model solves this by making the candidate's profile the master record. All other outputs are derived from this single source, ensuring consistency.

Key Takeaway: A Single Source of Truth is a strategic framework that guarantees data consistency, builds trust among users, and empowers reliable decision making across the business.

How to Implement a Single Source of Truth

Building an SSOT requires both technical implementation and strong governance.

- Define Master Entities: Identify your core business entities that need to be managed as master data. These are the high value nouns of your business, such as Customers, Products, or Candidates.

- Establish Data Governance: Create clear rules and assign ownership for who can create, update, or delete master data. Implement audit trails to log every change, providing a transparent history of who changed what and when.

- Synchronize Updates: Use technologies like Change Data Capture (CDC) to efficiently detect changes in the master source and send them to all downstream systems. This ensures analytical databases and dashboards always have the most current information.
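
On a small scale, the idea that every output is derived from the master record can be illustrated with a database view. Note that this swaps in a plain SQL view rather than Change Data Capture, which needs infrastructure beyond a short example. The sketch assumes sqlite3, and the table and view names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Master records: the only place candidate facts are written.
    CREATE TABLE candidates (
        candidate_id INTEGER PRIMARY KEY,
        full_name    TEXT NOT NULL
    );
    CREATE TABLE candidate_certifications (
        candidate_id  INTEGER NOT NULL REFERENCES candidates(candidate_id),
        certification TEXT NOT NULL,
        PRIMARY KEY (candidate_id, certification)
    );

    -- Derived output: computed from the master tables, never edited directly.
    CREATE VIEW resume_summary AS
    SELECT c.candidate_id,
           c.full_name,
           COUNT(cc.certification) AS certification_count
    FROM candidates AS c
    LEFT JOIN candidate_certifications AS cc
           ON cc.candidate_id = c.candidate_id
    GROUP BY c.candidate_id, c.full_name;

    INSERT INTO candidates VALUES (1, 'Ada Lovelace');
    INSERT INTO candidate_certifications VALUES (1, 'SQL Associate');
""")

count_sql = "SELECT certification_count FROM resume_summary WHERE candidate_id = 1"
before = conn.execute(count_sql).fetchone()[0]

# The candidate adds a certification to the master profile...
conn.execute("INSERT INTO candidate_certifications VALUES (1, 'Cloud Data Engineer')")

# ...and the derived summary reflects it with no separate copy to update.
after = conn.execute(count_sql).fetchone()[0]
print(before, after)
```

When downstream systems live in separate databases, a view is no longer enough, and that is where a synchronization mechanism such as CDC comes in.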

8. Design for Historical Tracking and Auditability

Effective data modeling is not just about capturing the current state of your data. It is also about understanding its past. Designing for historical tracking involves creating a data structure that records how data changes over time. This is essential for audits, compliance, and deriving deeper business insights. It ensures you can answer questions like, "What was a customer's address last year?"

For a remote job board, this is crucial. Without historical tracking, you lose valuable context. For example, tracking changes to a user's resume shows their skill progression. Monitoring updates to a job posting reveals how hiring needs evolve. Building this capability into your data model from the start creates a powerful analytical asset.

Key Takeaway: A data model without historical tracking is like a book with only the last page. It tells you where you are, but not how you got there. Building in auditability turns your database into a valuable historical record.

How to Implement Historical Tracking

This often involves using techniques like Slowly Changing Dimensions (SCDs), a concept from data warehousing.

- Implement SCD Type 2: For dimensions where you need to preserve history, use the SCD Type 2 method. Instead of overwriting old data, you add a new row for each change. Each row is managed with columns like valid_from, valid_to, and a flag like is_current.

- Create Dedicated Audit Tables: For high stakes data like financial transactions, use database triggers to automatically populate separate audit tables. These tables should log the old value, new value, the user who made the change, and a timestamp.

- Use Surrogate Keys: Rely on system generated surrogate keys (e.g., user_sk) instead of natural business keys (e.g., email_address). This prevents issues when a business key changes, allowing you to maintain a consistent link to historical records.
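
A minimal SCD Type 2 sketch might look like this. It assumes sqlite3, ISO formatted date strings, and two hypothetical helpers, record_city and city_as_of; a production warehouse would usually handle this in its loading pipeline instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_candidate (
        candidate_sk INTEGER PRIMARY KEY,  -- surrogate key, one per version
        candidate_id INTEGER NOT NULL,     -- stable business identifier
        city         TEXT NOT NULL,
        valid_from   TEXT NOT NULL,        -- ISO dates compare correctly as text
        valid_to     TEXT,                 -- NULL while the row is current
        is_current   INTEGER NOT NULL DEFAULT 1
    );
""")

def record_city(candidate_id, city, change_date):
    """Close the current version (if any) and add a new current row."""
    with conn:
        conn.execute(
            "UPDATE dim_candidate SET valid_to = ?, is_current = 0 "
            "WHERE candidate_id = ? AND is_current = 1",
            (change_date, candidate_id),
        )
        conn.execute(
            "INSERT INTO dim_candidate (candidate_id, city, valid_from) "
            "VALUES (?, ?, ?)",
            (candidate_id, city, change_date),
        )

def city_as_of(candidate_id, as_of_date):
    """Return the city that was valid on a given date, or None."""
    row = conn.execute(
        "SELECT city FROM dim_candidate "
        "WHERE candidate_id = ? AND valid_from <= ? "
        "AND (valid_to IS NULL OR valid_to > ?)",
        (candidate_id, as_of_date, as_of_date),
    ).fetchone()
    return row[0] if row else None

record_city(42, "Austin", "2023-01-01")
record_city(42, "Denver", "2024-06-01")

city_last_year = city_as_of(42, "2023-09-15")
city_now = city_as_of(42, "2024-07-01")
version_count = conn.execute("SELECT COUNT(*) FROM dim_candidate").fetchone()[0]
print(city_last_year, city_now, version_count)
```

The move to Denver adds a second row instead of overwriting the first, so a report dated 2023 still places the candidate in Austin.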

9. Implement Proper Access Control and Data Security

Data security is not an afterthought. It is an integral part of data modeling that must be designed from the start. This involves building security measures directly into your schema to protect sensitive information, ensure compliance with privacy regulations like GDPR, and control who can see or change specific data. A secure model prevents data breaches and builds user trust.

For Jobsolv, this is non negotiable. Protecting candidate personal information and salary data is paramount. By designing a schema with row level security, encryption, and strict permission controls, you ensure that candidates can only see their own applications and employers only see the data they are authorized to view.

Key Takeaway: Building security into the data model is far more effective than adding it on later. A secure by design approach makes compliance easier and protects your company's most valuable asset: its data.

How to Implement Data Security in Your Model

Start by classifying your data and applying controls based on sensitivity. Your goal is to enforce the principle of least privilege, where users can only access the minimum data required for their functions.

- Classify Data by Sensitivity: Categorize data fields into levels such as public, internal, or restricted. For example, a candidate's email is restricted, while a job title is public.

- Encrypt Sensitive Data at Rest: Use database features like Transparent Data Encryption (TDE) to encrypt personally identifiable information (PII) such as names and addresses. This protects data even if the storage is compromised.

- Implement Role Based and Row Level Security: Define clear access policies based on user roles (e.g., candidate, recruiter, admin). Use row level security to ensure users can only see records relevant to them, like a job seeker viewing only their own application history.
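
To show how classification, roles, and row filtering fit together, here is a simplified sketch in plain Python. It is an application level illustration only: in a real system you would rely on your database's own row level security and permission features. The sensitivity labels, role clearances, and sample records are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sensitivity label per column; real labels come from your data policy.
COLUMN_SENSITIVITY = {
    "candidate_id": "internal",
    "job_title": "public",
    "status": "internal",
    "candidate_email": "restricted",
}
LEVELS = {"public": 0, "internal": 1, "restricted": 2}

# Highest sensitivity each role may read (least privilege).
ROLE_CLEARANCE = {"candidate": "internal", "recruiter": "internal", "admin": "restricted"}

@dataclass(frozen=True)
class User:
    user_id: int
    role: str  # "candidate", "recruiter", or "admin"

APPLICATIONS = [
    {"candidate_id": 1, "job_title": "Data Analyst",
     "status": "submitted", "candidate_email": "ada@example.com"},
    {"candidate_id": 2, "job_title": "Data Engineer",
     "status": "interview", "candidate_email": "grace@example.com"},
]

def visible_applications(user):
    """Filter rows first, then drop columns above the user's clearance."""
    clearance = LEVELS[ROLE_CLEARANCE[user.role]]
    # Row level rule: candidates only ever see their own applications.
    rows = [
        r for r in APPLICATIONS
        if user.role != "candidate" or r["candidate_id"] == user.user_id
    ]
    # Column rule: unlabeled columns default to restricted (deny by default).
    return [
        {col: val for col, val in r.items()
         if LEVELS[COLUMN_SENSITIVITY.get(col, "restricted")] <= clearance}
        for r in rows
    ]

candidate_view = visible_applications(User(user_id=1, role="candidate"))
recruiter_view = visible_applications(User(user_id=7, role="recruiter"))
admin_view = visible_applications(User(user_id=9, role="admin"))
print(candidate_view)
```

Treating unlabeled columns as restricted means a newly added field stays hidden until someone classifies it, which is the safer failure mode.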

10. Document Your Data Model and Plan for Integration

A data model without documentation is a black box. Creating a comprehensive data dictionary and metadata layer is a critical practice that ensures your data assets are understandable and usable. This documentation serves as the user manual for your data, detailing everything from column definitions and business logic to transformation rules.

For data professionals, this clarity is a career accelerator. Imagine trying to integrate a new job board API without a clear data dictionary. The project becomes a high risk exercise in guesswork. Proper documentation provides a clear roadmap, speeds up developer onboarding, and enables seamless system integrations. If you are unsure where to start, reviewing process documentation examples can show you what clear, thorough documentation looks like.

Key Takeaway: Documentation is an integral part of the data modeling process that transforms a complex schema into a valuable and accessible organizational asset.

How to Implement Documentation and Integration Planning

Your goal is to create a living repository of knowledge about your data that both people and systems can rely on.

- Build a Detailed Data Dictionary: For every table and column, document its business purpose, data type, constraints (e.g., NOT NULL), and example values. Include metadata like update_frequency and data_owner.

- Design for Integration: Do not build your model in a vacuum. Design schemas with common integration standards in mind. Create abstraction layers that separate your internal data format from the formats required by external partners, making your system more resilient to changes.

- Use Data Catalog Tools: For larger environments, manual documentation is not scalable. Use data catalog tools like Collibra, Alation, or open source options like Apache Atlas to automate metadata discovery and provide a central, searchable hub for all data knowledge.
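
Before reaching for a catalog tool, you can generate a starter data dictionary straight from the schema. This sketch assumes sqlite3 and its PRAGMA table_info command; the build_data_dictionary helper and the DESCRIPTIONS mapping are hypothetical, and other databases expose the same metadata through their own system catalogs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE job_postings (
        job_posting_id INTEGER PRIMARY KEY,
        title          TEXT NOT NULL,
        salary_min     INTEGER
    );
""")

# Hand written business context that the schema alone cannot supply.
DESCRIPTIONS = {
    ("job_postings", "job_posting_id"): "Surrogate identifier for a posting",
    ("job_postings", "title"): "Job title as shown to candidates",
}

def build_data_dictionary(connection):
    """Combine schema metadata from SQLite with hand written descriptions."""
    tables = [row[0] for row in connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    entries = []
    for table in tables:
        # PRAGMA table_info yields: cid, name, type, notnull, dflt_value, pk.
        # Table names come from the catalog itself, not from user input.
        for _cid, name, col_type, notnull, _default, pk in connection.execute(
                f"PRAGMA table_info({table})"):
            entries.append({
                "table": table,
                "column": name,
                "type": col_type,
                # Simplification: primary key columns are reported as not nullable.
                "nullable": not notnull and not pk,
                "primary_key": bool(pk),
                "description": DESCRIPTIONS.get((table, name), "TODO: document"),
            })
    return entries

data_dictionary = build_data_dictionary(conn)
for entry in data_dictionary:
    print(entry)
```

Undocumented columns surface as "TODO: document", which gives you a simple checklist of the gaps left to fill.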

Turn Best Practices Into Your Next Job Offer

We have covered ten foundational data modeling best practices, from normalization to designing for scalability and security. Each principle is more than just a technical guideline. These are the core skills that separate a good data professional from a great one.

Mastering these concepts is your ticket to building robust and reliable data systems. More importantly, in a competitive job market, it is how you show undeniable value to potential employers. You are not just organizing data. You are building the strategic asset that powers an entire organization’s decision making.

From Theory to a Better Resume

The real power of understanding these practices lies in your ability to explain their impact. Hiring managers are searching for candidates who can connect technical actions to business outcomes.

Instead of a generic resume bullet point, frame your work using these principles:

Before: "Worked on database design."

After: "Redesigned the customer database using 3NF normalization, which reduced data redundancy by 35% and improved reporting query speeds by 50%."

Before: "Created data models for the analytics team."

After: "Implemented a dimensional star schema for sales data, enabling the BI team to build self service dashboards in Power BI and reducing report generation time from hours to minutes."

Each "after" version does more than state a task. It showcases your expertise, quantifies your impact, and proves you understand why data modeling best practices matter. It is the kind of language that gets noticed by Applicant Tracking Systems (ATS) and hiring managers.

Actionable Steps to Get Hired Faster

Your next step is to translate this knowledge into a powerful career story.

- Review Your Portfolio: Revisit a past project. Can you identify where you applied normalization or used a star schema? Update your project descriptions to highlight these specific techniques and their results.

- Start a New Project: If you need more experience, start a personal project. Find a public dataset and build a data model from scratch. Document your process. This becomes a powerful example to share in interviews.

- Optimize Your Resume: Go through your resume line by line. Find every opportunity to replace vague descriptions with specific, impact driven statements that feature these data modeling practices. Use keywords like "normalization," "dimensional modeling," "data integrity," and "schema design" to ensure your resume passes ATS scans.

By internalizing these principles, you equip yourself with the skills and the vocabulary to stand out, command higher level interviews, and secure your next remote data analytics role with confidence.

Ready to translate your advanced data modeling skills into an interview winning resume? The Jobsolv free ATS approved resume builder is designed to help you highlight these technical achievements effectively. Our tools help you craft and tailor a resume that proves your expertise, getting you noticed for the 100K+ remote and hybrid data roles on our job board.
